How the Distributed Disaggregated Chassis Fulfills AI Cluster Scale Demand

by Will Chang

AI technologies have been in development for decades, but two factors drove the recent leap in Generative AI: huge amounts of data and parallel computing. This blog series discusses how AI works and how the UfiSpace Distributed Disaggregated Chassis (DDC) solution supports it with large-scale connectivity and an efficient, easily managed network fabric.

Generative AI

Generative AI is an artificial intelligence system that can be trained to generate new content such as text, video, images, and code. A Large Language Model (LLM) is one such system: it uses neural networks to process and analyze data. A neural network loosely simulates how neurons in the brain communicate and process signals, which lets the model learn the meaning of data and the relationships within it.
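To make the neuron analogy concrete, here is a minimal Python sketch of an artificial neuron: it computes a weighted sum of its inputs, adds a bias, and passes the result through an activation function. This is a textbook illustration, not how any particular LLM is built. The sigmoid activation and the hand-picked weights are assumptions chosen for readability, and the function names are hypothetical. Real LLMs learn billions of such weights during training.

```python
import math

def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One artificial neuron: weighted sum of inputs plus bias, squashed by a sigmoid."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))  # output lies in (0, 1)

def layer(inputs: list[float], weight_rows: list[list[float]], biases: list[float]) -> list[float]:
    """A layer is several neurons reading the same inputs; outputs feed the next layer."""
    return [neuron(inputs, w, b) for w, b in zip(weight_rows, biases)]

# Tiny two-layer network with made-up, untrained weights (illustrative only).
hidden = layer([0.5, -1.0], [[0.8, 0.2], [-0.4, 0.9]], [0.1, 0.0])
output = neuron(hidden, [1.5, -2.0], 0.3)
print(round(output, 4))
```

Training consists of adjusting the weights and biases so that outputs like `output` match the desired results.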

The explosion of online data provides abundant material for AI training. However, these data sets are so large that no single computer can offer the computing power needed to process them. Parallel processing accelerates AI development by distributing the data and computation across multiple nodes and then merging the results back at a coordinator for the next phase of processing.
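The scatter, compute, and merge pattern described above can be sketched in a few lines of Python. This is a hedged illustration that assumes a toy one-parameter linear model y = w·x, with threads standing in for cluster nodes. The helper names (`shard`, `partial_gradient`, `parallel_gradient`) are hypothetical. Real training frameworks exchange results between GPUs over the network fabric using collective operations, not a Python thread pool.

```python
from concurrent.futures import ThreadPoolExecutor

def shard(data, n):
    """Split data into n contiguous shards whose sizes differ by at most one."""
    k, r = divmod(len(data), n)
    shards, start = [], 0
    for i in range(n):
        end = start + k + (1 if i < r else 0)
        shards.append(data[start:end])
        start = end
    return shards

def partial_gradient(samples, w):
    """Worker task: sum of d/dw (w*x - y)^2 over one shard, plus the shard size."""
    g = sum(2 * (w * x - y) * x for x, y in samples)
    return g, len(samples)

def parallel_gradient(data, w, workers=4):
    """Coordinator: scatter shards to workers, then merge partial sums into the mean gradient."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(lambda s: partial_gradient(s, w), shard(data, workers)))
    total_g = sum(g for g, _ in results)
    total_n = sum(n for _, n in results)
    return total_g / total_n

data = [(x, 3.0 * x) for x in range(1, 101)]  # samples from y = 3x
print(parallel_gradient(data, w=1.0))
```

The merged result equals the gradient a single machine would compute over the full data set. Only the work is divided, so the model sees the same answer while each node handles a fraction of the data.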

The ultra-high AI cluster scale

A few numbers show how powerful an AI cluster can be. One supercluster built from 2,000 NVIDIA DGX A100 systems, containing 16,000 NVIDIA A100 GPUs, is targeted to reach almost five exaFLOPS of computing power when complete. FLOPS (floating-point operations per second) measures computing performance as the number of floating-point calculations performed per second, so five exaFLOPS means 5×10¹⁸ calculations per second. In other words, doing one second's worth of this cluster's work at one calculation per second would take 5×10¹⁸ seconds, or about 158,443,825,000 years. The number is so vast that it is hard to even imagine.
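The arithmetic above can be checked with a short Python sketch. It assumes a mean year of 365.2422 days, which reproduces the article's 158,443,825,000-year figure. It also treats "almost five exaFLOPS" as exactly 5×10¹⁸ for simplicity. Dividing that target across 16,000 GPUs gives about 312.5 TFLOPS per GPU. This is close to the A100's published 312 TFLOPS peak for dense FP16/BF16 Tensor Core math, which suggests (though the text does not say so) that the target counts reduced-precision operations.

```python
SECONDS_PER_YEAR = 365.2422 * 24 * 3600  # assumed mean (tropical) year, in seconds

cluster_flops = 5e18   # "almost five exaFLOPS", rounded up to 5 x 10^18
gpus = 16_000
dgx_systems = 2_000

# Time to do one second of the cluster's work at one calculation per second.
years_at_one_per_second = cluster_flops / SECONDS_PER_YEAR
gpus_per_system = gpus // dgx_systems
flops_per_gpu = cluster_flops / gpus

print(f"{years_at_one_per_second:,.0f} years")
print(gpus_per_system, "GPUs per DGX A100 system")
print(f"{flops_per_gpu / 1e12:.1f} TFLOPS per GPU")
```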

The network fabric must be scalable and provide a very high radix (the number of ports per switch) to interconnect an AI cluster of this size with its memory and storage resources.
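Radix matters because it sets how many endpoints a non-blocking network can reach. The standard sizing rules for folded Clos networks built from identical radix-k switches are k²/2 endpoints for two tiers (leaf-spine) and k³/4 for a classic three-tier fat tree. The Python sketch below applies them. These are generic textbook formulas, not UfiSpace figures, and the radix values are arbitrary examples.

```python
def two_tier_endpoints(k: int) -> int:
    """Non-blocking two-tier folded Clos (leaf-spine) from radix-k switches.

    Each of k leaves uses k/2 ports for endpoints and k/2 for uplinks;
    k/2 spines each connect once to every leaf.
    """
    if k % 2:
        raise ValueError("radix must be even for a half-down/half-up split")
    return k * (k // 2)  # k leaves x k/2 endpoints = k^2 / 2

def three_tier_endpoints(k: int) -> int:
    """Classic three-tier fat tree with k pods of radix-k switches: k^3 / 4 endpoints."""
    if k % 2:
        raise ValueError("radix must be even")
    return k ** 3 // 4

for radix in (32, 64, 128):
    print(radix, two_tier_endpoints(radix), three_tier_endpoints(radix))
```

Doubling the radix quadruples the two-tier endpoint count and multiplies the three-tier count by eight, which is why high-radix switching is central to AI-scale fabrics.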

How the Distributed Disaggregated Chassis handles scale challenges

Readers unfamiliar with the Distributed Disaggregated Chassis (DDC) concept can refer to our dedicated blog post: What is a Distributed Disaggregated Chassis (DDC)? In a nutshell, the DDC is a new architecture that takes the components of a chassis apart and turns them into individual pizza-box switches.

The UfiSpace DDC has proven itself as an effective telecom solution since its first commercial deployment in 2018. The same architecture can also serve cloud and AI applications, and the introduction of Broadcom Jericho3-AI further optimizes the architecture and cost structure, making it better suited to AI cluster interconnection.

Here is how DDC fulfills AI cluster scale demand.

Scalability:

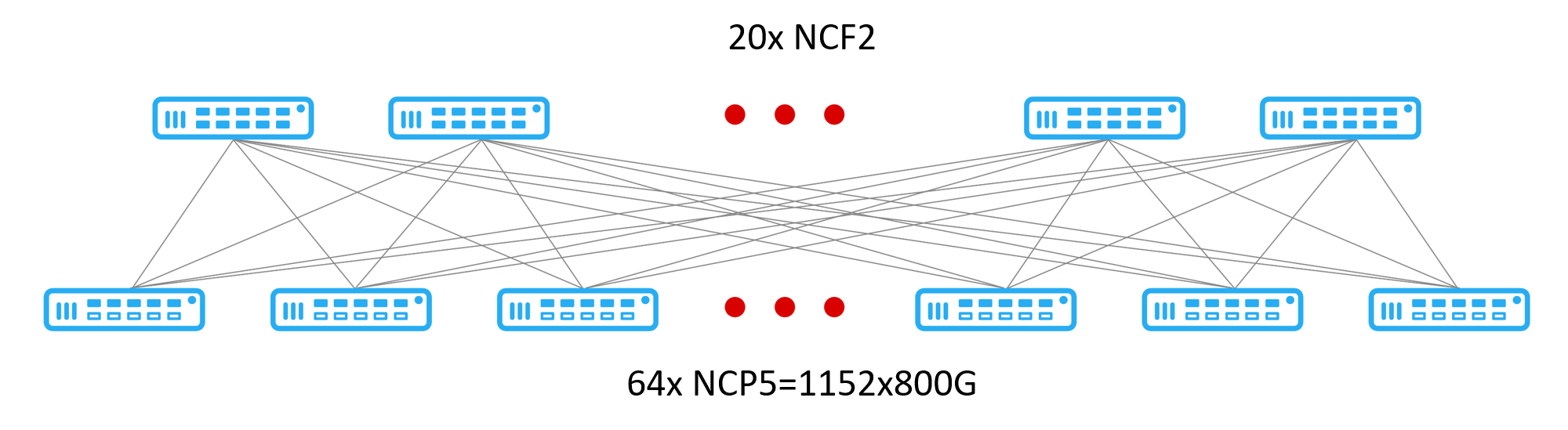

By adopting the Clos topology, the DDC cluster breaks the limits of a fixed-form-factor chassis and can scale out on demand. With the latest Broadcom Jericho3 and Ramon 3 platform, it can build a cluster that meets the ultra-high radix demand of AI clusters.

Figure 2: Two-Layer DDC Topology
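A two-layer DDC can be sized with simple arithmetic. In the simplified model below, every Jericho3 line card sends one fabric link to every Ramon 3 fabric switch. Under that model, the number of Ramon 3 devices equals the fabric links per line card, and the Ramon 3 port count caps the number of line cards. Both parameter values are assumptions, not datasheet figures. `ramon_fabric_ports=64` is inferred from the three-layer description: 64 lower-layer Ramon 3 devices exposing 2,048 fabric ports implies 32 downlinks each, or 64 ports if split evenly between downlinks and uplinks. `jericho_fabric_links=20` mirrors the twenty pods in the three-layer example. The 18 × 800G ports per Jericho3 is 36,864 / 2,048 from the text. The class name is hypothetical.

```python
from dataclasses import dataclass

@dataclass
class TwoLayerDDC:
    ramon_fabric_ports: int       # ports per Ramon 3 fabric switch (assumed value)
    jericho_fabric_links: int     # fabric-facing links per Jericho3 line card (assumed value)
    jericho_800g_ports: int = 18  # network-facing 800G ports per Jericho3 (36,864 / 2,048)

    def ramon_count(self) -> int:
        # One link from every line card to every fabric switch.
        return self.jericho_fabric_links

    def max_jericho(self) -> int:
        # Each Ramon 3 port terminates exactly one line card.
        return self.ramon_fabric_ports

    def max_800g_interfaces(self) -> int:
        return self.max_jericho() * self.jericho_800g_ports

ddc = TwoLayerDDC(ramon_fabric_ports=64, jericho_fabric_links=20)
print(ddc.ramon_count(), ddc.max_jericho(), ddc.max_800g_interfaces())
```

Under these assumptions, growth stops at the Ramon 3 port count. That ceiling is what the third Ramon layer removes.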

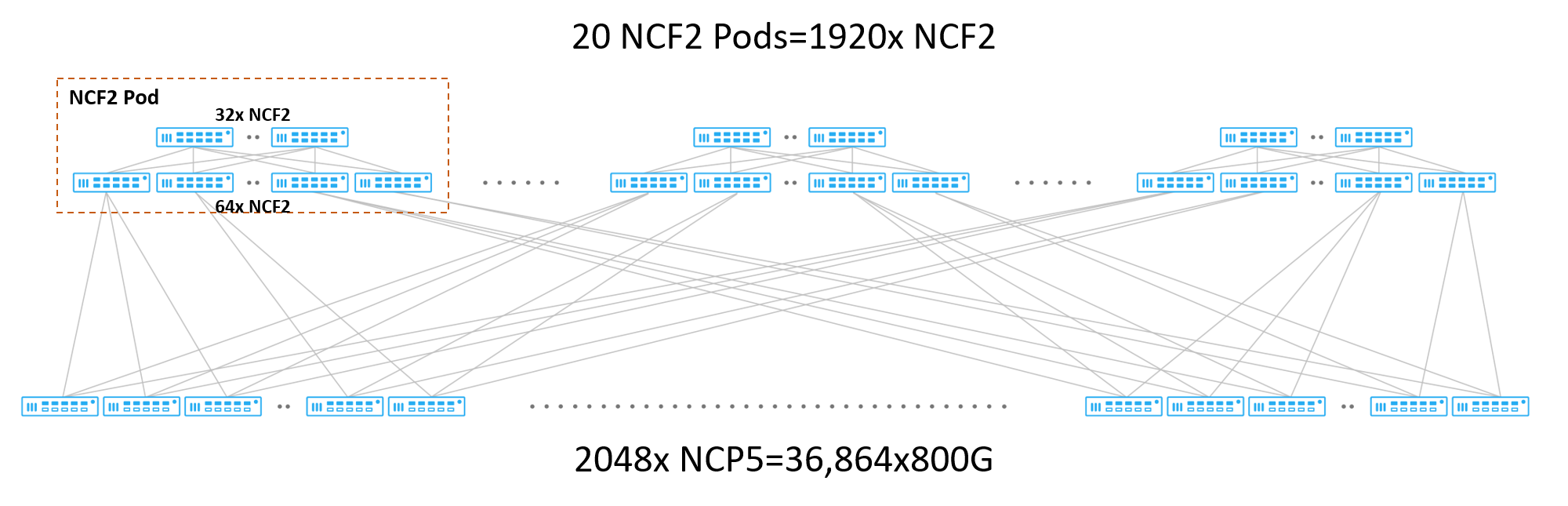

We can extend the radix further by adding a third Ramon 3 layer: 32 Ramon 3 devices interconnect 64 Ramon 3 devices to form a Ramon pod. Each Ramon pod can be viewed as one large Ramon device with 2,048 fabric ports. Twenty Ramon pods can connect 2,048 Jericho3 devices, providing 36,864 × 800G interfaces.

Figure 3: Three-Layer DDC Topology
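The three-layer figures are internally consistent, and the Python sketch below re-derives them. Its one assumption, labeled in the docstring, is that each Ramon 3 splits its fabric ports evenly between downlinks and uplinks. Everything else comes straight from the text. The function name is hypothetical.

```python
def three_layer_check(lower_ramons=64, upper_ramons=32, pod_ports=2048,
                      pods=20, jerichos=2048, interfaces_800g=36_864):
    """Re-derive the three-layer DDC figures stated in the text.

    Assumption: every Ramon 3 splits its ports evenly between downlinks and
    uplinks, so pod_ports = lower_ramons * ramon_ports / 2.
    """
    ramon_ports = 2 * pod_ports // lower_ramons
    lower_uplinks = lower_ramons * ramon_ports // 2
    upper_ports = upper_ramons * ramon_ports
    return {
        "ports_per_ramon": ramon_ports,
        # Every lower-layer uplink lands on an upper-layer port.
        "pod_internally_balanced": lower_uplinks == upper_ports,
        # Total pod-facing ports shared across all Jericho3 devices:
        # 20 means one link from each Jericho3 to each of the 20 pods.
        "links_per_jericho": pods * pod_ports // jerichos,
        "ports_800g_per_jericho": interfaces_800g // jerichos,
    }

print(three_layer_check())
# → {'ports_per_ramon': 64, 'pod_internally_balanced': True, 'links_per_jericho': 20, 'ports_800g_per_jericho': 18}
```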

Manageability:

Manageability is always a concern at this network scale. IT administrators must configure all equipment correctly and handle the related service provisioning whenever a node is added or removed.

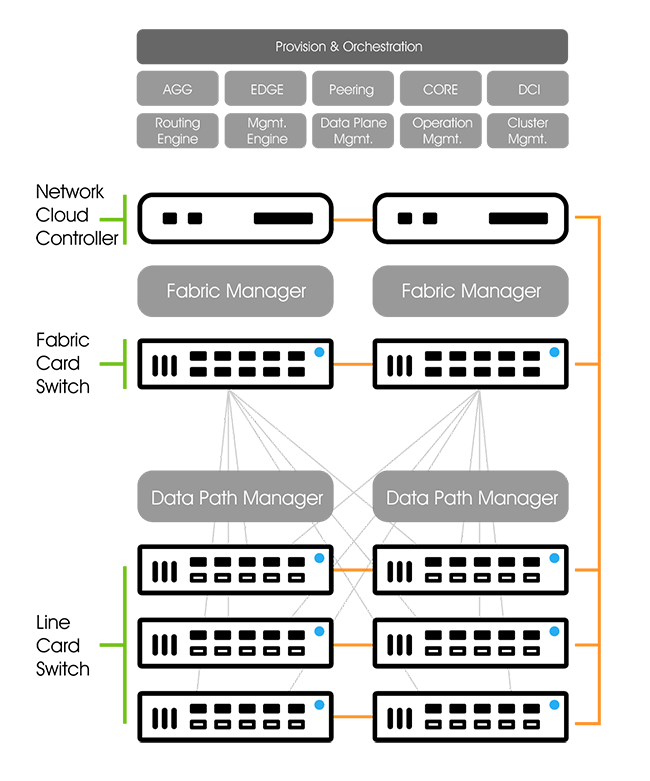

The DDC is known as a virtual cluster because it is managed as a single entity and appears as one device. On each box, a standalone NOS handles designated tasks: on the Ramon node (Fabric Card Switch) it runs the Fabric Manager, and on the Jericho node (Line Card Switch) it runs the Data Plane Manager. A network cloud controller (NCC) centrally handles the control plane and management protocols to coordinate node behavior.
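The split between a central control plane and distributed forwarding can be modeled in a few lines of Python. This is a hypothetical sketch, not UfiSpace's NCC implementation. The `Box` and `Controller` classes, the route, and the `lc1/3` interface name are all illustrative assumptions. The only details taken from the text are the role-to-component mapping and the idea that the NCC runs the control plane once for the whole cluster.

```python
from dataclasses import dataclass, field

@dataclass
class Box:
    name: str
    role: str  # "line" (Jericho, Line Card Switch) or "fabric" (Ramon, Fabric Card Switch)
    fib: dict[str, str] = field(default_factory=dict)  # prefix -> egress interface

    @property
    def nos_component(self) -> str:
        # Per the text: Data Plane Manager on line cards, Fabric Manager on fabric cards.
        return "Data Plane Manager" if self.role == "line" else "Fabric Manager"

class Controller:
    """Hypothetical NCC model: one control plane and one routing table for many boxes."""

    def __init__(self, boxes: list[Box]):
        self.boxes = boxes
        self.rib: dict[str, str] = {}

    def learn_route(self, prefix: str, egress: str) -> None:
        # The route is learned once, centrally, then programmed on every line card.
        # Fabric cards only switch cells between line cards, so they get no FIB entries.
        self.rib[prefix] = egress
        for box in self.boxes:
            if box.role == "line":
                box.fib[prefix] = egress

boxes = [Box("lc0", "line"), Box("lc1", "line"), Box("fc0", "fabric")]
ncc = Controller(boxes)
ncc.learn_route("10.0.0.0/8", "lc1/3")
for b in boxes:
    print(b.name, b.nos_component, b.fib)
```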

An orchestrator is also available to take care of lifecycle management, service creation and provisioning, and network analytics.

This way, the IT administrator manages one huge router instead of multiple chassis, which eases maintenance. Adding or removing a node is as easy as inserting or removing a line card in a chassis, but without the chassis's physical limits.

Figure 4: DDC is managed as one node
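The "line card in a chassis" analogy can be sketched in Python as follows. In this hypothetical model, adding a Jericho3 node assigns it the lowest free logical slot, and removing one frees that slot for reuse. The interface list always reads like that of a single router. The class name, slot scheme, and wiring rule (one fabric port per line card on every Ramon 3, so the Ramon 3 port count caps membership) are assumptions for illustration. The 18 × 800G ports per line card is derived from the text's 36,864 / 2,048.

```python
class LogicalRouter:
    """Hypothetical sketch: a DDC managed as one router, with line cards joining like chassis slots."""

    def __init__(self, fabric_ports_per_ramon: int, ports_800g_per_card: int = 18):
        # Assumed wiring: each line card uses one port on every Ramon 3,
        # so the Ramon 3 port count bounds how many line cards can join.
        self.max_cards = fabric_ports_per_ramon
        self.ports_per_card = ports_800g_per_card
        self.cards: dict[str, int] = {}  # line card name -> logical slot

    def add_line_card(self, name: str) -> int:
        if name in self.cards:
            raise ValueError(f"{name} is already a member")
        free = [s for s in range(self.max_cards) if s not in self.cards.values()]
        if not free:
            raise RuntimeError("no free fabric ports")
        self.cards[name] = free[0]  # lowest free slot
        return free[0]

    def remove_line_card(self, name: str) -> int:
        return self.cards.pop(name)  # the slot becomes free for reuse

    def interfaces(self) -> list[str]:
        # slot/port naming, as on a single chassis router
        return [f"{slot}/{p}" for slot in sorted(self.cards.values())
                for p in range(self.ports_per_card)]

router = LogicalRouter(fabric_ports_per_ramon=4)
for card in ("jericho-a", "jericho-b", "jericho-c"):
    router.add_line_card(card)
router.remove_line_card("jericho-b")     # slot 1 becomes free
print(router.add_line_card("jericho-d"))  # reuses slot 1
print(len(router.interfaces()))
```

The administrator only sees slots and interfaces change. The cabling and per-box configuration behind them are the controller's concern.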

Takeaway

From a scale perspective, the DDC solution meets the ultra-high radix demand of AI clusters while minimizing maintenance effort, because the NCC and orchestrator manage the whole DDC cluster centrally. A single-chassis solution cannot achieve this. Contact us for more details about data center network solutions for traditional data centers or AI/ML clusters.

For more information, please contact our sales team.

About the Author

Will Chang, Technical Marketing Manager at UfiSpace, provides technical expertise and executes marketing campaigns on technical topics.