What is a Distributed Disaggregated Chassis (DDC)?

by Will Chang

The Challenges for Future Networking

The chassis dominated the switching and routing market for decades. It offered multiple slots for pluggable service modules that provide switching and routing functions or network services, and service providers took advantage of this design to allocate resources on demand.

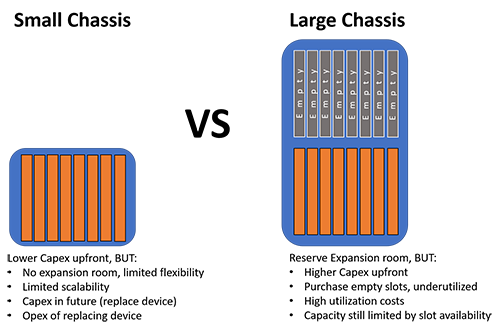

However, as we enter the 5G era, the chassis becomes a roadblock on the path to future service applications and network innovations. According to GSMA, global mobile data usage will grow almost four-fold by 2025. So, when it comes to upgrading switching capacity to meet increasing demand, you face the dilemma of whether to buy a smaller chassis or a bigger one. If you go small, you will end up buying a bunch of small chassis as traffic grows, and managing them becomes an issue; if you go big, you will waste space and resources on ports you don't need right now.

DDC, A Disruptive Innovation

DDC stands for Distributed Disaggregated Chassis. It breaks up the traditional monolithic chassis into separate building blocks so that a switch cluster can be created and scaled according to the needs of the network. Physical dimensions are no longer a problem, because you can increase service capacity gradually by adding building blocks while keeping CAPEX and OPEX to a minimum.

Let's take a closer look at the distributed disaggregated chassis to see what each term contributes to reinventing the traditional chassis.

Distributed

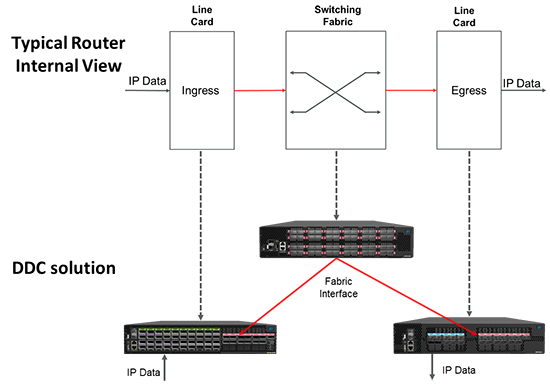

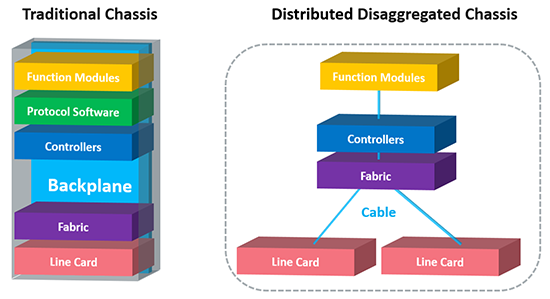

A traditional chassis switching or routing system typically has several components locked within its chassis, such as line cards, fabric cards, controllers, function modules and protocol software. These components are connected to a single backplane, which is similar to a motherboard in a personal computer. Just like a motherboard, the backplane has a limited number of slots available for each component, so expansion is quite limited.

Line cards are what you add when capacity needs to increase. The fabric cards link the line cards together, so as more line cards are added, more fabric cards are added as needed. Of course, more fans and power supplies are needed as well to support the additional line and fabric cards. The other major components of a switching or routing chassis do not affect switching and routing capacity. However, once the chassis runs out of line card slots, getting another chassis means purchasing those components all over again.

The “distributed” in DDC means that each component is distributed into a standalone box. Each box is equipped with its own power supplies, cooling fans, CPU, chipset and protocol software for its specific function. Each box is 2RU in height and fits into an industry standard 19” rack. This way, when we want to scale up the capacity of the chassis, we only need to add the components that increase capacity, which are the line card and fabric card boxes.

Additionally, instead of a single backplane connecting everything together, we use industry standard QSFP28 and QSFP-DD transceivers as well as cables such as DACs, AOCs, AECs, and ACCs. The distributed disaggregated chassis therefore breaks through the backplane limitations of a traditional chassis. Because each add-on is connected with cables instead of being plugged into a “board,” the chassis capacity can grow far beyond what a single backplane allows.

Disaggregated

A traditional chassis system typically comes with proprietary software and equipment at a hefty price. Furthermore, the features and services available are limited to what the vendor can provide. If you need a feature outside of the vendor's offering, it takes a lot of resources to get a third-party integration. By the time the integration is done, something new is available and you have spent all that time and money on old technology.

A disaggregated chassis separates the software from the hardware so that the best of both worlds can be combined to maximize the value and benefits for the application scenario. Each of the distributed components is a disaggregated white box that is compatible with various open standard software. So, you can choose the software vendor of your liking and maintain the same distributed disaggregated chassis architecture.

The disaggregated white box switch and router architecture also brings another benefit to the table: the same white box hardware infrastructure can be used in multiple positions within the network. Traditional chassis models vary depending on their use case. Proprietary systems usually have a specific purpose and require a different product family or model extension for other applications. A disaggregated white box, however, not only has its own CPU and merchant silicon, but is also compatible with any vendor’s software. The same distributed disaggregated chassis can be positioned in the aggregation network, edge network, core network and even in the data center.

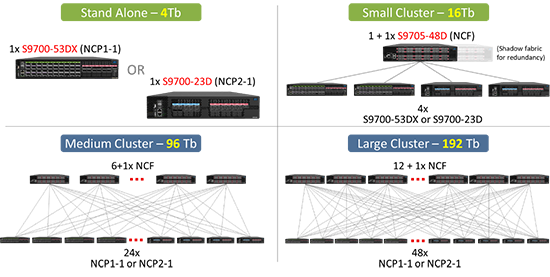

Chassis

This is perhaps the most straightforward part of the DDC. Despite how it looks, the distributed disaggregated chassis is considered one single chassis. Using the DDC architecture, a single switch or router chassis has the potential to be scaled from 4 Tbps up to 768 Tbps. Not only that, it can be scaled piecemeal based on the capacity the network needs now and in the future.

The Building Blocks of DDC

The distributed disaggregated chassis architecture consists of two major white box components, which correspond to the fabric cards and line cards of a traditional chassis.

Line Card White Box

The line card white box, also known as a network cloud processor or NCP, serves as the line card module in the distributed disaggregated chassis and provides the interfaces to the network. At UfiSpace, we have two types of line card white boxes or NCPs, both of which are powered by the Broadcom Jericho2. One is equipped with 40x100GE service ports (NCP1-1) and the other with 10x400GE service ports (NCP2-1). They both have 13x400GE fabric ports. When only the service ports are used and the fabric ports are left unconnected, the NCP1-1 and NCP2-1 can function as standalone units. The fabric ports are used for connecting to the fabric card white box (NCF) in order to build a DDC cluster.
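To make the port counts concrete, the short sketch below totals the service and fabric bandwidth of each NCP model from the figures above. The class `NcpModel` and its field names are illustrative only and are not part of any UfiSpace software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NcpModel:
    """Port layout of a line card white box (hypothetical helper type)."""
    name: str
    service_ports: int
    service_port_gbps: int
    fabric_ports: int = 13        # both models have 13 fabric ports
    fabric_port_gbps: int = 400   # each fabric port is 400GE

    @property
    def service_gbps(self) -> int:
        return self.service_ports * self.service_port_gbps

    @property
    def fabric_gbps(self) -> int:
        return self.fabric_ports * self.fabric_port_gbps


NCP1_1 = NcpModel("NCP1-1", service_ports=40, service_port_gbps=100)
NCP2_1 = NcpModel("NCP2-1", service_ports=10, service_port_gbps=400)

for ncp in (NCP1_1, NCP2_1):
    print(f"{ncp.name}: service {ncp.service_gbps} Gbps, fabric {ncp.fabric_gbps} Gbps")
```

Both models come out at 4,000 Gbps of service bandwidth against 5,200 Gbps of fabric bandwidth, so the fabric side of each box has more capacity than its service side.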

Fabric Card White Box

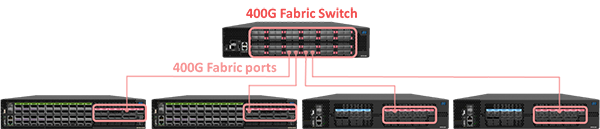

The fabric card white box, also known as a Network Cloud Fabric or NCF, only has fabric ports. It acts as the backplane that connects the line card white boxes (NCPs) and forwards traffic between them. The UfiSpace fabric card white box has 48x400GE fabric ports and is powered by the Broadcom Ramon.

Building a DDC Cluster

Although the line card white boxes can work as standalone switches or routers, to unleash the true potential of the distributed disaggregated chassis architecture, the DDC cluster is the way to go. As mentioned above, each line card white box has 13x400GE fabric ports, which are specifically used to connect to the fabric white box.

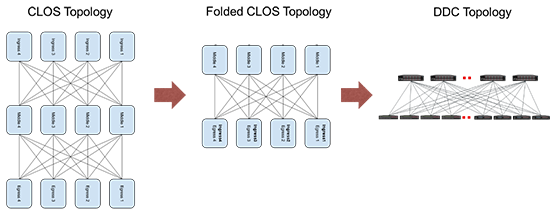

The DDC cluster is built using the Clos topology: the fabric ports on each line card white box are connected to the fabric white boxes so that every line card white box is linked to every fabric white box. In this way, traffic from one node always takes a predictable and fixed number of hops to reach another node.
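The wiring rule can be sketched in a few lines. This is a minimal illustration, assuming every NCP runs exactly one fabric link to every NCF (a two-tier form of Clos); the function names and box labels are hypothetical.

```python
from itertools import product


def build_clos_links(num_ncp: int, num_ncf: int) -> set[tuple[str, str]]:
    """Return one (NCP, NCF) link for every pair of boxes."""
    ncps = [f"ncp{i}" for i in range(num_ncp)]
    ncfs = [f"ncf{j}" for j in range(num_ncf)]
    return set(product(ncps, ncfs))


def paths_between(links: set[tuple[str, str]], src: str, dst: str) -> list[list[str]]:
    """List every NCP -> NCF -> NCP path between two different NCPs."""
    ncfs_from_src = {ncf for ncp, ncf in links if ncp == src}
    ncfs_from_dst = {ncf for ncp, ncf in links if ncp == dst}
    return [[src, ncf, dst] for ncf in sorted(ncfs_from_src & ncfs_from_dst)]


links = build_clos_links(num_ncp=4, num_ncf=2)
paths = paths_between(links, "ncp0", "ncp3")
print(len(links))  # 4 NCPs x 2 NCFs = 8 links
print(paths)       # one path per NCF, each crossing exactly one NCF
```

Under this assumption, any two NCPs are always two links apart, and the number of parallel paths between them equals the number of NCFs in the cluster.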

The distributed disaggregated chassis also uses cell switching, instead of packet switching, between the line card and fabric card boxes. Packets are chopped into cells and distributed randomly across the fabric links, which spreads the load over the whole fabric and helps keep forwarding reliable and efficient.
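The idea of cell switching can be illustrated with a toy model. The sketch below is not how the switching silicon implements it: the 256-byte cell size is an assumed value, the cell format is invented, and round-robin assignment is used in place of random distribution so that the output is repeatable.

```python
CELL_BYTES = 256  # assumed cell size, for illustration only


def split_into_cells(packet: bytes, cell_bytes: int = CELL_BYTES) -> list[tuple[int, bytes]]:
    """Chop a packet into (sequence_number, payload) cells."""
    return [
        (seq, packet[offset:offset + cell_bytes])
        for seq, offset in enumerate(range(0, len(packet), cell_bytes))
    ]


def spray(cells: list[tuple[int, bytes]], num_links: int) -> dict[int, list[tuple[int, bytes]]]:
    """Assign cells to fabric links round-robin (stand-in for random spraying)."""
    links: dict[int, list[tuple[int, bytes]]] = {link: [] for link in range(num_links)}
    for seq, payload in cells:
        links[seq % num_links].append((seq, payload))
    return links


def reassemble(links: dict[int, list[tuple[int, bytes]]]) -> bytes:
    """Collect cells from all links and restore order by sequence number."""
    cells = [cell for queue in links.values() for cell in queue]
    return b"".join(payload for _, payload in sorted(cells))


packet = bytes(range(256)) * 6  # a 1536-byte packet
links = spray(split_into_cells(packet), num_links=13)
print({link: len(queue) for link, queue in links.items() if queue})
assert reassemble(links) == packet
```

Because every packet is cut into small, equal-sized pieces before it crosses the fabric, a single large flow is spread over many links instead of being pinned to one.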

If you want to serve more network connections due to growing traffic demands, just connect a new line card white box to the fabric white boxes. When the fabric capacity is not enough, simply add another fabric white box. Using this method of expansion, one of our distributed disaggregated chassis can be built into a cluster of up to 192 Tbps service capacity in a non-blocking and redundant configuration.
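The 192 Tbps figure is consistent with the port counts quoted earlier if one assumes that each NCP sends one fabric link to each NCF. The back-of-the-envelope check below works under that assumption; it is not a vendor sizing tool, and the function name `max_cluster` is hypothetical.

```python
NCP_SERVICE_GBPS = 40 * 100  # NCP1-1: 40x100GE (10x400GE on the NCP2-1 gives the same total)
NCP_FABRIC_PORTS = 13        # fabric ports per NCP
NCF_FABRIC_PORTS = 48        # fabric ports per NCF


def max_cluster(ncp_fabric_ports: int = NCP_FABRIC_PORTS,
                ncf_fabric_ports: int = NCF_FABRIC_PORTS) -> tuple[int, int]:
    """Largest cluster when every NCP has one link to every NCF (assumed rule)."""
    max_ncf = ncp_fabric_ports  # each NCP spends one fabric port per NCF
    max_ncp = ncf_fabric_ports  # each NCF spends one fabric port per NCP
    return max_ncp, max_ncf


max_ncp, max_ncf = max_cluster()
total_tbps = max_ncp * NCP_SERVICE_GBPS / 1000
print(max_ncp, max_ncf, total_tbps)  # 48 13 192.0
```

Under this wiring rule the cluster tops out at 48 line card boxes and 13 fabric boxes, and 48 boxes at 4 Tbps each give 192 Tbps of service capacity.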

Benefits of the Distributed Disaggregated Chassis

Lower TCO

Because you can build a small cluster that meets current capacity and scale out as demand grows, CAPEX can be kept low. With only two building blocks (the line card white box and the fabric white box) needed to scale out capacity, configuration effort is minimized and management in the back office is simplified.

Higher Capacity

The service capacity is no longer limited by the physical dimensions of the chassis. Just add another node to expand the capacity.

Reliability

Redundant designs ensure network availability, so that no single point of failure will compromise the service.

Openness

Powered by merchant silicon and compliant with open standards, the distributed disaggregated chassis can be easily integrated with any NOS, automation and orchestration software.

The future of networking is driven by applications, and with 5G becoming more widely available, the demand potential can go beyond our imagination. To prepare for uncertainty, an agile and flexible infrastructure is key. The distributed disaggregated chassis is a solution designed for the next generation of networking to help service providers take the lead in the race to the 5G era.